Autonomy Solution


Is (or was) there life beyond Earth? The answer to this question lies underground on planetary bodies in our solar system. Planetary subsurface voids are among the most likely places to find signs of life (both extinct and extant), and they are also leading candidates for future human colonization beyond Earth. To this end, TEAM CoSTAR is participating in the DARPA Subterranean Challenge to develop fully autonomous systems for exploring subsurface voids, with a dual focus on planetary exploration and on terrestrial applications in search and rescue, the mining industry, and AI/autonomy in extreme environments.

To address technical challenges across multiple domains in the autonomous exploration of extreme environments, we develop a unified, modular software system called NeBula (Networked Belief-aware Perceptual Autonomy). JPL’s NeBula is specifically designed to address stochasticity and uncertainty in various elements of the mission, including sensing, environment, motion, system health, and communication. NeBula has been implemented on multiple heterogeneous robotic platforms (wheeled, legged, tracked, and flying vehicles), has been demonstrated across various terrestrial and planetary-analogue missions, and has won a DARPA Challenge focused on robotic autonomy.

NeBula develops an autonomy architecture that translates mission specifications into single- or multi-robot behaviors. NeBula quantifies risk and trust in this process by taking uncertainty in robot motion, control, sensing, and the environment into account when abstracting activities and behaviors. As a result, it provides quantitative guarantees on the performance of the autonomy framework under given environmental assumptions.
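To make "quantifying risk under uncertainty" more concrete, the sketch below shows one common pattern, a chance constraint: each candidate behavior is kept only if its estimated failure probability stays below a bound, and the highest-value feasible behavior is then chosen. This is an illustrative assumption, not NeBula's actual formulation; the `Behavior` fields, the one-dimensional Gaussian position-error model, and all numbers are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Behavior:
    # Hypothetical candidate behavior; every field is an illustrative assumption.
    name: str
    reward: float       # expected mission value (arbitrary units)
    clearance_m: float  # distance from the planned path to the nearest obstacle
    sigma_m: float      # std. dev. of position error toward that obstacle


def collision_risk(b: Behavior) -> float:
    """One-sided Gaussian tail: P(error > clearance) for zero-mean error."""
    return 0.5 * math.erfc(b.clearance_m / (b.sigma_m * math.sqrt(2.0)))


def select_behavior(candidates: List[Behavior], max_risk: float) -> Optional[Behavior]:
    """Chance-constrained choice: best reward among behaviors with risk <= max_risk."""
    feasible = [b for b in candidates if collision_risk(b) <= max_risk]
    return max(feasible, key=lambda b: b.reward, default=None)


fast = Behavior("fast_traverse", reward=10.0, clearance_m=0.3, sigma_m=0.3)
careful = Behavior("careful_traverse", reward=6.0, clearance_m=0.6, sigma_m=0.2)
print(select_behavior([fast, careful], max_risk=0.01).name)  # careful_traverse
```

With `max_risk=0.01`, the faster behavior (about a 16% chance of exceeding its clearance) is rejected in favor of the slower one; raising the bound trades safety for mission value, and an empty feasible set returns `None` rather than forcing a risky choice.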

NeBula focuses on a modular design to enable adaptation to various mobility platforms (legged, flying, wheeled, and tracked) and various computational capacities.

NeBula develops a GPS-free navigation solution that is resilient to perceptually challenging conditions such as variable illumination, dust, darkness, smoke, and fog. The solution relies on degeneracy-aware fusion of complementary sensing modalities, including vision, IMU, lidar, radar, contact sensors, and ranging systems (e.g., magneto-quasistatic signals and ultra-wideband (UWB) radios). The system can autonomously switch between and fuse different sensing modalities depending on the features present in the environment.
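A much-simplified illustration of degeneracy-aware fusion: each odometry source reports a per-axis estimate together with an information value (inverse variance), and along any axis where a source's information falls below a threshold, that source is treated as degenerate and excluded before information-weighted averaging. Real estimators typically detect degeneracy from the eigenvalues of the full information matrix rather than axis by axis; the diagonal model, the `fuse_axes` helper, the threshold, and the sensor values below are all hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

# (per-axis values, per-axis information = 1 / variance) from one sensor
Estimate = Tuple[Sequence[float], Sequence[float]]


def fuse_axes(
    estimates: List[Estimate], min_info: float
) -> Tuple[List[Optional[float]], List[float]]:
    """Information-weighted fusion per axis, skipping degenerate contributions."""
    n_axes = len(estimates[0][0])
    fused_vals: List[Optional[float]] = []
    fused_info: List[float] = []
    for axis in range(n_axes):
        num, den = 0.0, 0.0
        for values, info in estimates:
            if info[axis] < min_info:
                continue  # degenerate along this axis: do not trust this source here
            num += info[axis] * values[axis]
            den += info[axis]
        # None signals that no non-degenerate source constrains this axis
        fused_vals.append(num / den if den > 0.0 else None)
        fused_info.append(den)
    return fused_vals, fused_info


# Lidar in a long, featureless tunnel: x (along the tunnel) is barely constrained.
lidar = ([5.0, 0.10, 0.0], [0.01, 100.0, 100.0])
vio = ([5.4, 0.20, 0.0], [4.0, 4.0, 4.0])  # visual-inertial odometry
vals, info = fuse_axes([lidar, vio], min_info=1.0)
```

Here the along-tunnel position comes entirely from visual-inertial odometry, while the lateral and vertical axes are dominated by the well-constrained lidar estimate.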

NeBula develops large-scale (multi-kilometer) SLAM solvers for GPS-denied settings, along with 3D mapping frameworks that use confidence-rich mapping methods to provide precise topological, semantic, and geometric maps of extreme environments, such as subsurface caves and mine networks, under variable and challenging illumination conditions.
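Confidence-rich mapping itself is beyond a short example, but the underlying idea that each map cell carries a belief rather than a hard occupied/free label can be sketched with the classic log-odds occupancy grid. This is a swapped-in, simpler technique, not NeBula's mapping method; the `OccupancyGrid` class, the sensor-model probabilities, and the clamping limits are assumed values.

```python
import math
from typing import Dict, Tuple

Cell = Tuple[int, int]

# Assumed inverse sensor model: P(occupied | hit) = 0.7, P(occupied | miss) = 0.4
L_HIT = math.log(0.7 / 0.3)
L_MISS = math.log(0.4 / 0.6)
L_MIN, L_MAX = -4.0, 4.0  # clamping keeps cells able to change their belief later


class OccupancyGrid:
    """Hypothetical sparse 2D grid storing per-cell occupancy log-odds."""

    def __init__(self) -> None:
        # Missing cells have log-odds 0.0, i.e. probability 0.5 (unknown).
        self.logodds: Dict[Cell, float] = {}

    def update(self, cell: Cell, hit: bool) -> None:
        # Bayesian update in log-odds form is a simple addition.
        l = self.logodds.get(cell, 0.0) + (L_HIT if hit else L_MISS)
        self.logodds[cell] = max(L_MIN, min(L_MAX, l))

    def probability(self, cell: Cell) -> float:
        # Convert log-odds back to P(occupied).
        return 1.0 - 1.0 / (1.0 + math.exp(self.logodds.get(cell, 0.0)))


grid = OccupancyGrid()
grid.update((3, 4), hit=True)
grid.update((3, 4), hit=False)
print(round(grid.probability((3, 4)), 3))  # 0.609
```

Repeated consistent observations push a cell's probability toward 0 or 1, while conflicting ones keep it near 0.5, so the map encodes how confident it is in each cell as well as its most likely state.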

NeBula develops solutions that have enabled robots to autonomously traverse extreme terrain with various traversability-stressing elements, such as loose and slippery surfaces (sand, water), mud, rock-laden ground, and steep slopes, as well as to autonomously climb and descend stairs in terrestrial applications.

NeBula is designed to run on multi-robot systems, enabling faster and more efficient missions. Robots can also deploy static radios to create a wireless mesh network backbone. Inter-robot communication relies on resilient mesh networking solutions that can accommodate intermittent links between robots.
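One simple way to tolerate intermittent links is store-and-forward buffering: messages are queued while a link is down and delivered in order once it returns, with a bounded buffer that drops the oldest data when full. The sketch below is a hypothetical single-link model, not the networking stack used by the team; the `StoreAndForwardLink` class and its drop-oldest policy are assumptions.

```python
from collections import deque
from typing import Deque, List


class StoreAndForwardLink:
    """Hypothetical single hop that buffers messages while the link is down."""

    def __init__(self, capacity: int) -> None:
        # A deque with maxlen silently discards the oldest item when full.
        self.queue: Deque[str] = deque(maxlen=capacity)
        self.link_up = False
        self.delivered: List[str] = []

    def send(self, msg: str) -> None:
        self.queue.append(msg)
        self._flush()

    def set_link(self, up: bool) -> None:
        self.link_up = up
        self._flush()

    def _flush(self) -> None:
        # Deliver buffered messages in FIFO order while the link is available.
        while self.link_up and self.queue:
            self.delivered.append(self.queue.popleft())


link = StoreAndForwardLink(capacity=2)
for m in ["map_a", "map_b", "map_c"]:  # link is down; "map_a" is dropped when full
    link.send(m)
link.set_link(True)
print(link.delivered)  # ['map_b', 'map_c']
```

Dropping the oldest message favors fresh data, which often suits map or pose updates; a system that must never lose data would need a different policy.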

NeBula applies and extends reinforcement learning and, more generally, machine learning methods to enable fast and safe robot motion in perceptually degraded environments.

Networked control of a multi-robot system: NeBula is designed to autonomously coordinate and allocate tasks among a team of robots with heterogeneous capabilities, dynamically mapping robot capabilities to roles during the operation.

Legged and multi-limbed robots for handling extreme terrain (NeBula-Spot).

Wheeled rovers for traversing relatively even surfaces (NeBula-Husky).

Tracked robots with controllable flippers.

Rollocopter, a hybrid platform that can either roll or fly depending on the mission state.
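Capability-aware task allocation across such a heterogeneous team can be sketched with a greedy rule: consider only robot-task pairs where the robot has every capability the task requires, then assign pairs in order of increasing travel distance, one task per robot. Greedy assignment is a simple, swapped-in heuristic that can be suboptimal compared with an optimal assignment method such as the Hungarian algorithm; the robot names, capability labels, and positions below are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple


@dataclass
class Robot:
    name: str
    caps: FrozenSet[str]        # capabilities this platform offers (hypothetical labels)
    pos: Tuple[float, float]


@dataclass
class Task:
    name: str
    needs: FrozenSet[str]       # capabilities the task requires
    pos: Tuple[float, float]


def allocate(robots: List[Robot], tasks: List[Task]) -> Dict[str, str]:
    """Greedy one-task-per-robot allocation by distance, capabilities permitting."""
    pairs = sorted(
        (math.hypot(r.pos[0] - t.pos[0], r.pos[1] - t.pos[1]), r.name, t.name)
        for r in robots
        for t in tasks
        if t.needs <= r.caps  # subset test: robot covers every required capability
    )
    used_robots, used_tasks, plan = set(), set(), {}
    for _, robot, task in pairs:
        if robot not in used_robots and task not in used_tasks:
            plan[task] = robot
            used_robots.add(robot)
            used_tasks.add(task)
    return plan


robots = [
    Robot("spot", frozenset({"walk", "stairs"}), (0.0, 0.0)),
    Robot("husky", frozenset({"drive"}), (0.0, 1.0)),
    Robot("drone", frozenset({"fly"}), (5.0, 5.0)),
]
tasks = [
    Task("stairwell", frozenset({"stairs"}), (2.0, 0.0)),
    Task("shaft", frozenset({"fly"}), (6.0, 5.0)),
    Task("corridor", frozenset(), (0.0, 1.0)),
]
print(allocate(robots, tasks))
# {'corridor': 'husky', 'shaft': 'drone', 'stairwell': 'spot'}
```

Re-running the allocation as robots move, fail, or discover new tasks is one simple way to make the capability-to-role mapping dynamic.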

NASA’s BRAILLE (Biologic and Resource Analog Investigations in Low Light Environments) Project aims to investigate and quantify the geologic and biologic diversity of lava tube caves, while developing strategies for exploring them in a multitude of scenarios.

NeBula-powered autonomous robots create new operational paradigms for the science team in remote detection activities hosted at Lava Beds National Monument, one of the largest collections of Mars-like lava tubes in North America. These exercises help NASA prepare for future missions to caves on Mars and other rocky bodies, as the agency continues its ongoing hunt for life in the universe.

In support of the Artemis program’s vision, and leveraging the Commercial Lunar Payload Services (CLPS) timeline, our team is studying how NeBula can empower next-generation robots to enable extreme, long-range lunar terrain traversal, support the construction of future lunar outposts, and scout regions of the Moon (surface and subsurface) that hold resources such as reliable sources of water, oxygen, and construction materials.

In addition to enabling a capable and fully autonomous squad of versatile robots, NeBula develops intuitive human-robot interfaces that astronauts can use to facilitate activities such as carrying payloads or exploring areas that would pose a danger to the crew.

NeBula is also being deployed in support of R&D efforts and space missions targeting our Moon, Europa, Enceladus, and other bodies.

The DARPA Subterranean or “SubT” Challenge is a robotic competition that seeks novel approaches to rapidly map, navigate, and search underground environments. The competition spans a period of three years. CoSTAR is a DARPA-funded team participating in the systems track, developing and implementing physical systems tasked with traversing, mapping, and searching various subterranean environments, including natural caves, mines, and urban underground settings.

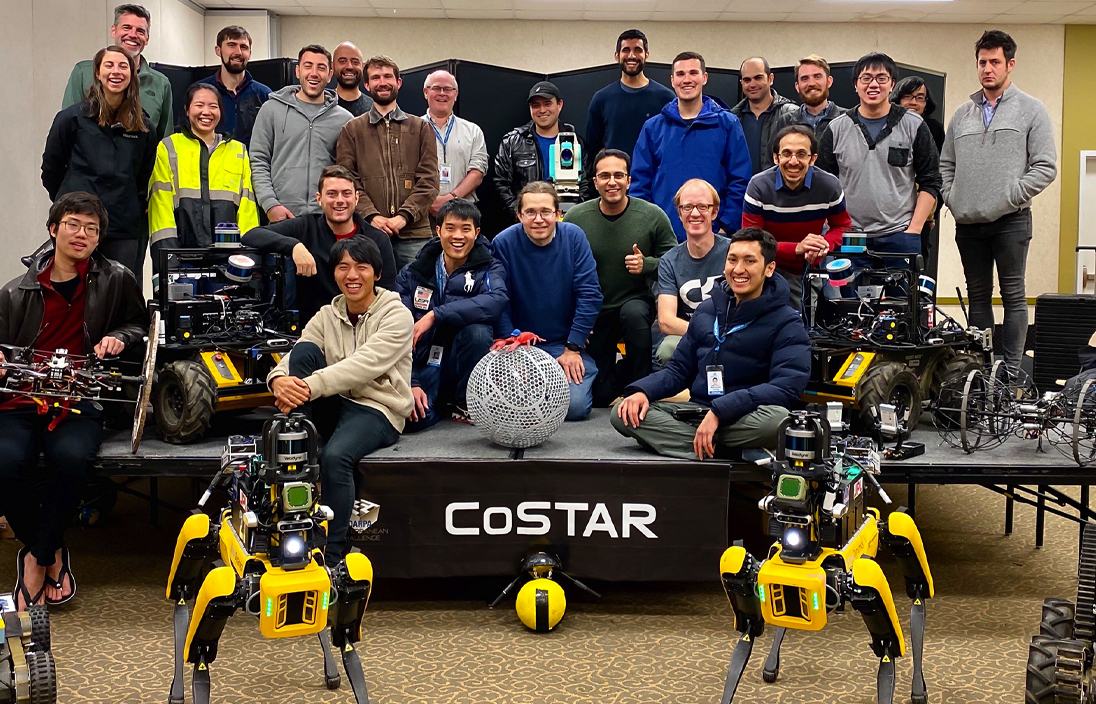

TEAM CoSTAR (Collaborative SubTerranean Autonomous Resilient Robots) is a collaboration between NASA’s JPL, MIT, Caltech, KAIST, LTU, and several industry partners. With more than 60 key members, TEAM CoSTAR aims to revolutionize how we operate in underground domains and subsurface voids for both terrestrial and planetary applications.

Interested in joining the team? Please contact us at subt.partnership@jpl.nasa.gov for more details on how to sponsor and support the team.


Below is a list of papers describing recent results from the NeBula framework and papers that the NeBula framework is built on: